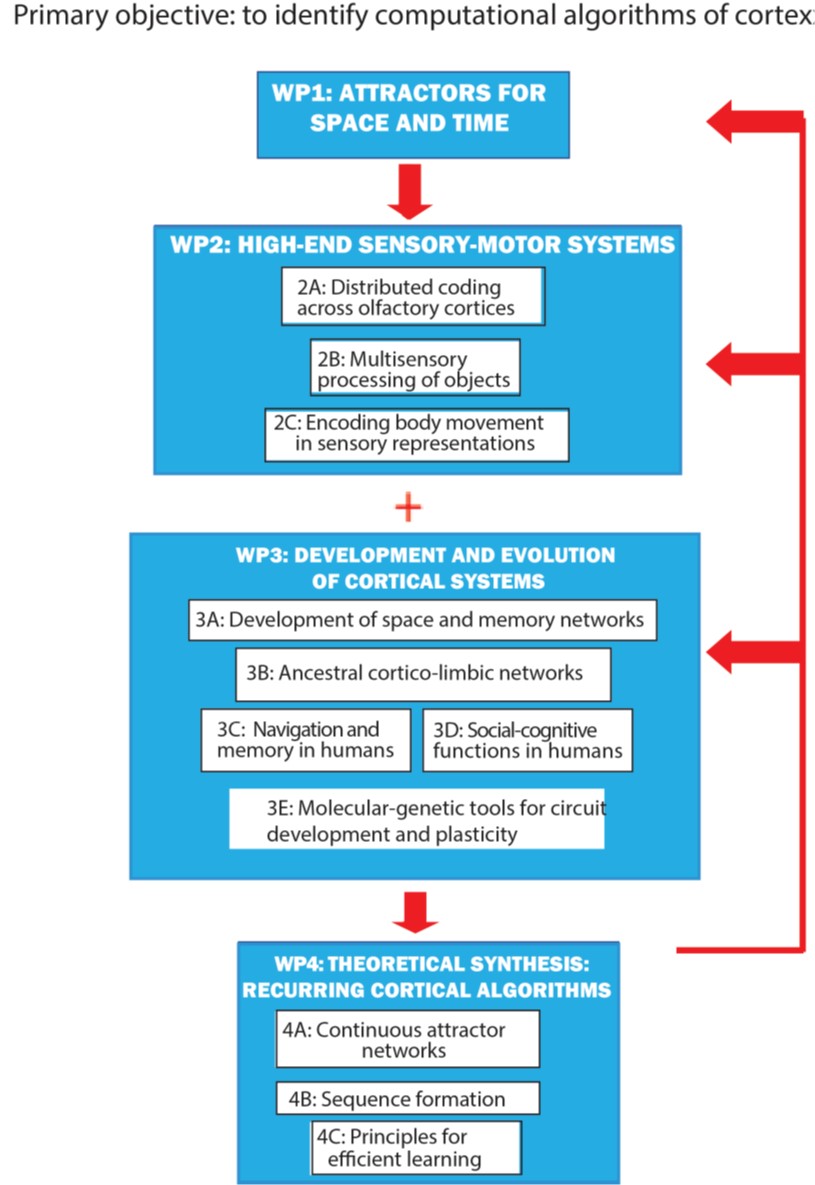

WP2C: Embodied sensation and cognition - The Kavli Institute for Systems Neuroscience

About

Motivation

In making a model of the world, the brain simultaneously generates a model of the individual acting in it via looped feedback architectures in which motoric output is re-generated at the first stages of sensory computation. Motivated by our recent findings that neural signals for 3D pose and movement (Whitlock CV, ref 4) pervade sensory areas including V1, we will determine how sensory and motor representations coexist in the same cortical cell populations (P1, P2) and are coordinated across cortices (P4).

Aims

Determine how pose and movement signals are integrated across low- and high-level sensory systems:

- 2.5 Determine how visual and auditory cortices jointly represent visual or auditory stimuli along with posture and movement in unrestrained rats, and assess whether those representations take the form of attractors (P1)

- 2.6 Determine how postural signals are coordinated between cortical regions over long distances (P4)

- 2.7 Determine how visual and body representations are shaped into sequences during learning (P2, P3)

Implementation

We will combine 3D body tracking and gaze reconstruction with Neuropixels recordings to determine how natural movements modulate neural population coding and potential attractor dynamics in V1 and A1 activity (WP4C; P1).

We will compare with other systems (WP2AB, 5A) and, with 2P miniscopes, assess contributions of specific cell-types.

We will then record from sensory and motor areas simultaneously to examine temporal organization via fast neural oscillations and traveling waves (P4).

Finally, we will monitor the emergence of sequential firing in posterior parietal and prefrontal cortex while animals learn to use bodily movement to report visual working memory decisions (P2, P3; WP2A, 5B; compare with WP3C).